When the AI Invoice Arrives Before the Governance

The pilot looked harmless: a small team, a clever AI assistant, a fast turnaround.

Then the invoice arrived—three different subscriptions for the same tool, bought on three different cards.

Two weeks later, Legal asked where the prompts were stored.

A month later, the CISO asked who approved the vendor’s sub-processors.

Nobody had done anything “wrong.” We had simply moved faster than our governance muscles could handle.

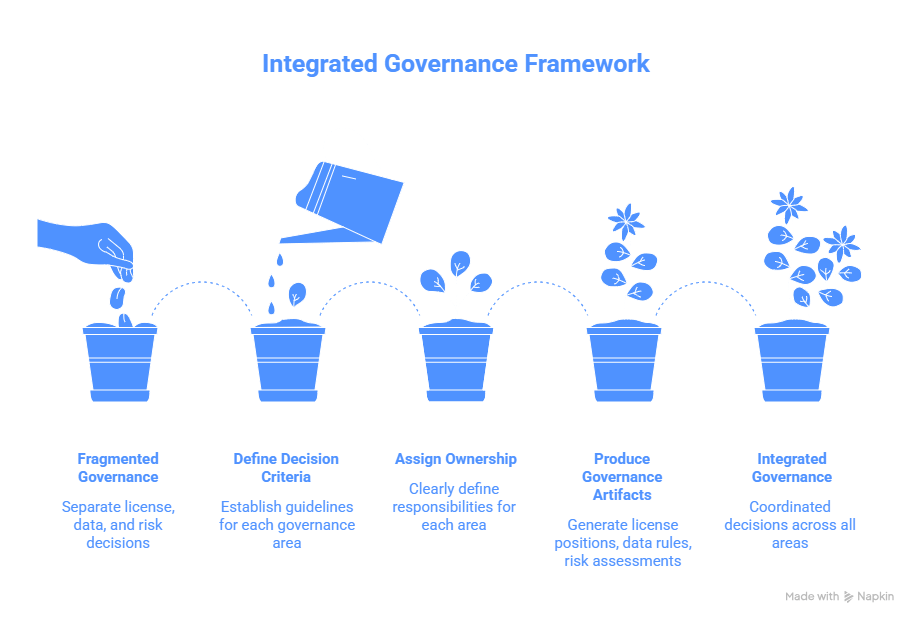

One Integrated Decision for Licenses, Data, and Risk

AI tools don’t fail enterprises because the models are “too smart.” They fail because organizations govern them in pieces: Procurement negotiates price, IT approves access, Security worries about data, Legal reads clauses, and the business just wants results. Each function does its job—yet the overall system still leaks cost, data, and accountability.

The principle is simple: govern licenses, data, and risk as one decision. Not as three parallel checklists, and not as a late-stage compliance tax. One integrated decision means you know (1) what you bought and how you measure value, (2) what data flows through it and what must never touch it, and (3) what risks you accept—with explicit owners and expiry dates. This is not bureaucracy. It’s how you keep autonomy without creating chaos.

Trust + Directness—The Week We Paused to Keep Moving

We rolled out an AI writing tool for a Nordic team and an India delivery team.

The Nordic product owner sent a short pre-read: “Use cases, allowed data, success measure.”

In India, we started quickly—people copied real customer text into the tool to get better drafts.

Nobody meant harm; it was just “doing the job well” under time pressure.

In the next steering meeting, the Nordic owner asked calmly, “Which data did we test with?”

Silence. Not defensive—just the uncomfortable kind where adults realize they missed a basic step.

Security joined the call, also calm, and asked for one thing: a data boundary written down.

The turning point was not a scolding. It was trust plus directness: “We fix it, then we continue.”

We paused rollout for one week, set rules, trained two champions, and restarted.

Adoption recovered. Confidence improved. And no one needed a hero speech—just a clear system.

Autonomy Needs Boundaries, Speed Needs Memory

Nordic and Indian teams often share the same intent: deliver quality, protect the company, respect people’s time. The friction usually comes from different default assumptions about autonomy and safety.

In many Nordic environments, autonomy is granted early and maintained through clarity: people expect boundaries to be explicit, decisions to be documented, and risks to be discussed without drama. A pre-read is not paperwork; it’s respect. Direct questions (“Who approved this?” “Where is the data stored?”) are not personal attacks; they are a normal way to keep the system healthy.

In many Indian delivery environments, autonomy is often earned through results under constraint: speed, responsiveness, and “making it work” are celebrated, especially when requirements are moving. If guidance is missing, teams will improvise—because waiting can feel like failure. That improvisation can be brilliant for delivery, but dangerous for AI tools, where a single hidden data flow can become a long-term liability.

The fix is not “be more Nordic” or “be less Indian.” The fix is shared operating language:

- Autonomy needs a boundary. Teams move fast within a defined safe zone.

- Directness needs psychological safety. Questions about risk are welcomed, not punished.

- Speed needs a memory. Decisions are logged so you don’t re-learn the same lesson every quarter.

Studies suggest that teams perform better when expectations are explicit and cognitive load is reduced—especially in high-ambiguity work. AI tools increase ambiguity (what counts as “data”? what counts as “output”? what counts as “approval”?). So the leadership task is to make the invisible visible—once—so execution can stay fast.

The Integrated Governance Model in One View

| One integrated decision | License governance (cost & value) | Data governance (what flows) | Risk governance (what we accept) |
| --- | --- | --- | --- |
| What we decide | Who can use which SKU, for what use cases | What data is allowed/prohibited, retention, and storage location | Security, legal, and operational risks; mitigations; expiry date |
| What we produce | License position, renewal plan, usage telemetry | Data classification rules + approved workflows | Risk assessment with owner + residual risk statement |
| Who owns | Procurement + SAM + Business owner | Data owner + IT + Security | Security + Legal + Business owner |
| What “good” looks like | No duplicate buys; adoption matches spend | No sensitive data in prompts; clear exception handling | Faster approvals with fewer surprises at renewal |

8 Practices You Can Implement Next Week

- Start with a use-case map, not a vendor name.

List the 5–10 AI use cases people actually want (meeting notes, code assistance, RFP drafting, knowledge search, customer support). Then select tools and controls based on use cases. This prevents “tool-first” chaos and reduces duplicate spend.

- Define a “safe-to-use zone” for data in one page.

Keep it human-readable: what’s allowed, what’s not allowed, and examples. Most issues come from ambiguity, not malice. Make the safe zone easy to follow under pressure.

- Create one intake gate, but make it fast.

A lightweight approval path beats underground adoption. Aim for a single form and a single triage meeting where Procurement, Security, and IT can say “yes, yes-with-conditions, or no.” If the gate is slow, people route around it.

- Instrument usage like you instrument any product.

Treat AI tooling as a service: track active users, feature adoption, and outcomes. License governance becomes simpler when you can see who uses what and why. It also strengthens negotiations because you know where value is real versus imagined.

- Align contract clauses to your data boundaries.

Your policy is only as strong as the agreement. Ensure the contract matches your rules on data retention, training on customer data, sub-processors, audit rights, and incident notification. This is where Procurement and Security stop being “separate worlds.”

- Use champions on both sides of the globe.

Nominate a Nordic champion and an India champion per major tool. Their job is to translate intent into day-to-day behavior: how to write prompts safely, how to escalate exceptions, how to share learnings without shaming.

- Run a renewal pre-read at month 9, not month 11.

Most AI contracts renew before you’ve measured anything meaningful. A month-9 pre-read forces reality: adoption, outcomes, risks, and whether you’re paying for hope. It also reduces late-stage panic and rushed approvals.

- Log decisions and revisit them on purpose.

AI tools evolve quickly; your decision last year may not fit this year. Keep a decision log with owners and expiry dates. That way changing your mind is a sign of maturity, not inconsistency.
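A decision log with owners and expiry dates can start as a handful of records plus one query: "what is due for review?" The sketch below shows one hypothetical shape, not a prescribed schema. The `Decision` fields, the `due_for_review` helper, the 30-day notice window, and the sample entries are all assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Decision:
    """One logged governance decision (hypothetical fields)."""
    tool: str
    summary: str
    owner: str
    decided_on: date
    expires_on: date


def due_for_review(log: list[Decision], today: date, notice_days: int = 30) -> list[Decision]:
    """Return decisions that have expired or expire within notice_days, soonest first."""
    due = [d for d in log if (d.expires_on - today).days <= notice_days]
    return sorted(due, key=lambda d: d.expires_on)


# Illustrative entries only; tool names, owners, and dates are made up.
log = [
    Decision("code-assistant", "Approved for internal repos only",
             "Security", date(2024, 12, 20), date(2025, 6, 20)),
    Decision("knowledge-search", "Approved with public documents only",
             "Data owner", date(2024, 12, 1), date(2025, 12, 1)),
    Decision("writing-assistant", "No customer text in prompts",
             "Business owner", date(2024, 11, 15), date(2025, 5, 15)),
]

due = due_for_review(log, today=date(2025, 6, 1))
for d in due:
    print(f"{d.tool}: owner={d.owner}, expires={d.expires_on}")
```

Running a check like this before each steering meeting turns "revisit on purpose" into a standing agenda item rather than something that depends on memory.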

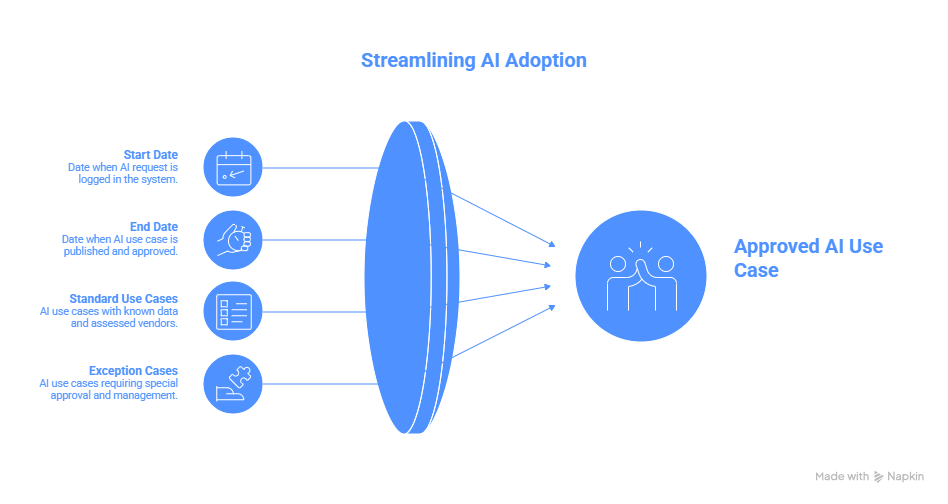

2–4 Weeks to an Approved, Governed Use Case (and Why It Matters)

Metric (range): “Time-to-Approved Use Case” of 2–4 weeks

What it represents: the time from a business request (“We want this AI tool for X”) to an approved, governed use case that teams can adopt without guesswork.

How a leader measures it:

- Start date: when the request is logged (ticket/form/email captured into the intake system).

- End date: when the approved use case is published (with data boundary + access model + owner).

- Track separately for “standard” use cases vs “exception” cases.
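The measurement above can be sketched in a few lines, assuming the intake system can export a start date, an end date, and a standard/exception label for each request. The `UseCaseRequest` record, its field names, the choice of the median as the summary statistic, and the sample dates are hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from statistics import median
from typing import Optional


@dataclass
class UseCaseRequest:
    name: str
    logged_on: date               # start date: request captured in the intake system
    published_on: Optional[date]  # end date: approved use case published (None if still open)
    kind: str                     # "standard" or "exception"


def days_to_approval(req: UseCaseRequest) -> Optional[int]:
    """Calendar days from logged request to published use case."""
    if req.published_on is None:
        return None
    return (req.published_on - req.logged_on).days


def median_days_by_kind(requests: list[UseCaseRequest]) -> dict[str, float]:
    """Median cycle time per kind, skipping requests that are still open."""
    buckets: dict[str, list[int]] = {}
    for req in requests:
        days = days_to_approval(req)
        if days is not None:
            buckets.setdefault(req.kind, []).append(days)
    return {kind: median(values) for kind, values in buckets.items()}


# Illustrative data only.
requests = [
    UseCaseRequest("meeting notes", date(2025, 1, 6), date(2025, 1, 24), "standard"),
    UseCaseRequest("RFP drafting", date(2025, 1, 13), date(2025, 2, 3), "standard"),
    UseCaseRequest("customer support", date(2025, 1, 8), date(2025, 3, 5), "exception"),
    UseCaseRequest("knowledge search", date(2025, 2, 1), None, "standard"),
]

cycle_times = median_days_by_kind(requests)
print(cycle_times)  # {'standard': 19.5, 'exception': 56}
```

The median is used here so that one slow exception does not distort the headline number. Open requests are skipped in this sketch, though a real report would probably also track their age.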

What “good” looks like:

- 2–4 weeks for standard use cases with known data classes and a vendor already assessed.

- Exceptions may take longer, but the key is predictability: clear reasons, clear owners, and a clear next step.

Why it matters: if this cycle time is too long, shadow AI will grow. If it’s too short without controls, risk will compound quietly.

Optional companion measure (used internally by many strong teams): “duplicate spend reduction,” in the range of 15–25% over the 6–12 months after implementing centralized intake and usage telemetry. To measure it, reconcile purchases across corporate cards, expense claims, and procurement systems, then compare duplicate spend in the baseline period with duplicate spend after governance is in place.
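As a rough sketch of that reconciliation, assume each purchase has already been normalized to a (tool, source, cost) record. The definition of "duplicate" used here is an assumption, not a standard: for each tool, everything beyond the single largest purchase counts as duplication. The tool names and amounts are made up.

```python
from collections import defaultdict

Purchase = tuple[str, str, float]  # (tool, source, cost for the period)


def duplicate_spend(purchases: list[Purchase]) -> float:
    """Total spend on repeat purchases of the same tool across all sources."""
    by_tool: dict[str, list[float]] = defaultdict(list)
    for tool, _source, cost in purchases:
        by_tool[tool].append(cost)
    # Assumption: the largest purchase per tool is the intended one; the rest is duplication.
    return sum(sum(costs) - max(costs) for costs in by_tool.values())


def reduction_pct(baseline: float, current: float) -> float:
    """Percentage drop in duplicate spend relative to the baseline period."""
    if baseline == 0:
        return 0.0
    return (baseline - current) * 100 / baseline


# Illustrative figures only.
baseline_period = [
    ("writing-assistant", "corporate_card", 300.0),
    ("writing-assistant", "expense_claim", 300.0),
    ("writing-assistant", "procurement", 300.0),
    ("code-assistant", "procurement", 500.0),
    ("code-assistant", "corporate_card", 200.0),
]
post_governance = [
    ("writing-assistant", "procurement", 300.0),
    ("writing-assistant", "expense_claim", 300.0),
    ("code-assistant", "procurement", 500.0),
    ("code-assistant", "corporate_card", 340.0),
]

before = duplicate_spend(baseline_period)  # 600 + 200 = 800
after = duplicate_spend(post_governance)   # 300 + 340 = 640
print(f"Duplicate spend reduction: {reduction_pct(before, after):.1f}%")
```

In practice the hard part is the normalization step: the same tool often appears under different vendor or reseller names on cards, expense claims, and purchase orders.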

Safe Speed as the Default Operating Model

Enterprise AI is no longer a “tool choice.” It’s an operating model choice.

Teams will adopt what helps them think and move faster—whether governance is ready or not.

So the job is not to slow people down; it’s to make safe speed the default.

When licenses, data, and risk are governed together, you stop treating each rollout like a special case.

You build a repeatable pathway: clear boundaries, calm accountability, and measurable value.

That’s what earns trust in Nordic environments—and what protects momentum in India delivery realities.

The most mature organizations aren’t the ones with the most AI experiments.

They’re the ones that can scale the right experiments without creating invisible debt.

“Governance is how autonomy survives contact with reality.”

“Speed is a gift—until it has nowhere safe to land.”
